I recently completed Certified Scrum Master training with Mishkin Berteig. One of the many interesting tidbits that came up over the course of the weekend was Mishkin's preference for treating every bug as the team's #1 priority as soon as it is discovered. (This is similar to the stop-the-line mentality of lean development.) I found this interesting, because it goes against the Scrum practice of putting decisions about what the team should work on in the hands of the Product Owner.

I also disagree with giving the Product Owner total control of what to work on, because I believe developers are in a better position to appreciate the real impact of bugs on the product, and it's best to put decisions in the hands of the people best able to understand the consequences. If the development team thinks a bug needs to be fixed pronto, it should be fixed pronto.

Unfortunately, even amongst development teams, there is not always consensus about the real cost of letting bugs linger. Many developers don't mind living with bugs while they continue to pump out new features, figuring they will get back to them "later". In the hopes of changing this situation, I'd like to talk about a hidden cost of leaving bugs in the code.

The Hidden Cost

Of course, there is the standard argument that the later a bug is fixed, the more expensive it is to fix. I'm going to assume that everyone has heard that argument before, and more-or-less agrees with it. I want to talk about something a little more insidious: when you fix a bug affects not only the cost of fixing it, but also the way you fix it.

As development work continues in the presence of a known bug, new dependencies on the code where the bug lives are likely to be added. Sometimes these dependencies will be on the bug itself, and often this happens without the developers realizing it. In general, as a piece of code acquires dependencies, it becomes riskier to change. I think this is even more true of bug-ridden code.
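To make "a dependency on the bug itself" concrete, here is a minimal, hypothetical Python sketch. The functions `page_count`, `page_count_fixed`, and `render_pager` are invented for illustration and don't come from any real codebase. A helper has a known bug, a later caller quietly compensates for it, and fixing the helper then breaks the caller:

```python
def page_count(total_items: int, page_size: int) -> int:
    # Known bug: floor division drops the final, partially filled page.
    return total_items // page_size


def page_count_fixed(total_items: int, page_size: int) -> int:
    # The correct version: ceiling division counts the partial page.
    return -(-total_items // page_size)


def render_pager(total_items: int, page_size: int, count=page_count) -> int:
    # Written later by someone who noticed the missing last page and
    # compensated here instead of fixing page_count. This caller now
    # depends on the bug itself.
    pages = count(total_items, page_size)
    if total_items % page_size:
        pages += 1
    return pages


print(render_pager(25, 10))                          # 3: correct, thanks to the workaround
print(render_pager(25, 10, count=page_count_fixed))  # 4: the "fix" introduces a new bug
```

The breakage only appears when the item count isn't an exact multiple of the page size (`render_pager(20, 10, count=page_count_fixed)` still returns 2), which is exactly the kind of dependency that is easy to miss until someone finally fixes the original bug.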

So, the longer you wait before fixing the bug, the more risk is introduced in fixing it. When the risk of fixing a bug increases, the reaction of most developers is to stick with the potential fix that appears least risky (even if there is a 'better' alternative).

A Brief Aside

Okay, I've used a dangerous word: 'better'. Anytime someone uses evaluative words like 'good', 'bad', 'better', or 'worse' without qualifying them with a context, I get suspicious. So here's my context:

Kent Beck makes a distinction between code quality and code health in a talk. I think this is an important distinction to make. Code quality relates to the number of bugs in your code, and how well it does what it's supposed to do. Code health refers to your code's ability to react to change. It relates to how well-factored your code is. You can just call it maintainability if you want.

The reason this distinction is important is that it's possible to improve code quality without improving code health. In fact, it's quite easy to improve code quality while reducing code health. Since code health is extremely desirable, I would assert that a fix that improves code quality and code health is better than one that improves code quality but reduces code health.

Back to Our Regularly Scheduled Program

Now we're getting to the point. When you put off a bug fix, you are going to feel pressure later to go with the (perceived) less risky solution: the quick hack that fixes the bug but reduces code health. And let's face it, most of us are not good at dealing with pressure. If we were, we would probably have insisted on fixing the bug right away in the first place.

So not only are you going to take longer to fix the bug later, you're going to take on technical debt, and all the pain and suffering (and reduced velocity) that technical debt entails.

Thursday, December 21, 2006

Wednesday, November 15, 2006

Let the Experts Make the Decisions

In many traditional organizations, developers end up making business decisions that they have no right to make. Maybe they are not able to get timely feedback from a business expert. Maybe they are so 'sure' of the answer they just don't bother asking. Maybe they are so knee-deep in technical details they don't realize they are making a business decision. If they make the wrong choice, this can have some pretty nasty consequences for the business in question.


As a result, agile practitioners have a strong focus on putting business decisions in the hands of business people. The business expert (product owner in Scrum lingo, customer in XP lingo):

- prioritizes features

- provides feedback on working software on a regular, frequent basis

- defines acceptance criteria for features

- remains available to work with developers and answer questions to ensure the features under development are meeting business needs.

Too much of a good thing

Sometimes, I see an agile team give the business expert too much control. I know, that sounds like heresy. Too much control in the hands of the customer? Is there such a thing? I think there is. Hear me out.

The reason agile practitioners put business decisions in the hands of business people is pretty obvious: they are the experts. They have the deepest understanding of the business needs. They are best able to understand the relevant consequences of the decision. As a result, they are in the best position to make the call.

For some decisions, though, the developers are the ones best able to understand the relevant consequences. I am not talking about most "technical" decisions. If you can easily translate the technical consequences to business consequences, it's not really a technical decision. When that's the case, explain the consequences in business terms, and let the business expert decide.

So what am I talking about?

I'm talking about decisions regarding software development process and practices. Consequences of these decisions are not clear, obvious, and easily comparable. They are subtle and complex. They require experience and expertise to understand. These decisions should not be left in the hands of a novice, and when it comes to software development, chances are your business expert is a novice.

Consider this exchange:

Bob the Developer: "Hi Jim, I wanted to ask you something. I'm working on feature x, and I've noticed that it would really help if we refactored the code we wrote when we implemented feature y. It's kinda becoming a mess."

Jim the Business Expert (looking confused): "I've heard you guys talk about refactoring before, but I'm not really sure what you mean... do you mean you'll have to go back and fix the code for feature y? I thought we were done with that one."

Bob: "Well, the feature is working fine, but we took some shortcuts in the code. It's making it hard to work in that part of the code now. It's going to be a real problem as we move forward."

Jim: "Hmm. How long will it take?"

Bob: "It'll take about half a day to refactor the existing code and add the new feature."

Jim: "Can you add the new feature without doing this refactoring?"

Bob: "Hmmm. Well, I'd rather not, but I think we could hack it in. Doing it that way would take about an hour."

Jim: "Well... we have a lot of features to work on, and marketing is expecting the first release by June. I think you should do it the quick way. I'd rather not waste a few hours on refactoring if it's not really necessary."

Whether or not to refactor as you develop a new feature is not a decision that should be posed to a business expert. It takes a fair amount of software development experience and expertise to understand the effects of refactoring vs. not refactoring. As developers, we are the experts when it comes to software development process and practices. We should recognize that, and act accordingly.

I like how James Shore puts it:

"If a technical option simply isn't appropriate, don't mention it, or mention your decision in passing as part of the cost of doing business."

Letting the customer steer the project is great, but don't give them the opportunity to steer it into the ground by making inappropriate technical decisions. Stand firm when it comes to responsible development practices. Use your best judgement. Remember, you're the expert.

Wednesday, October 18, 2006

Trust vs. Camaraderie

I was watching the movie Goodfellas a few nights ago, and a scene towards the end got me thinking. In the scene, Ray Liotta's character Henry is on bail after being busted by federal agents. He's having lunch with Robert De Niro's character Jimmy. Henry is trying to figure out if Jimmy's going to have him killed, and Jimmy is wondering if Henry is going to turn him over to the feds. Meanwhile, by all outward appearances, they're having a perfectly normal, friendly conversation.


Obviously this is an extreme example of the difference between camaraderie and trust. In particular, it shows that a relationship can have a high degree of camaraderie and a low degree of trust. Why is this important? Because teams that have camaraderie but not trust often don't realize they have a problem.

Why do we care about trust?

Trust is a wonderful thing. When a team has a high level of trust, they don't waste time with political maneuvering. They don't hold back suggestions that can help the team improve. Team members aren't afraid to ask for help, and are willing to help each other. They spend time collaborating to create solutions rather than arguing over details. When a team has a high level of trust, goals of individuals are aligned with goals of the team. There are no distractions. Productivity can soar.

When a team doesn't have trust, it's not a team at all. It's a group of individuals, each with separate and often conflicting goals. This wastes time and reduces productivity.

Why do so many teams have camaraderie but not trust?

Building camaraderie is easy. Socializing is easier for some than for others, but if you put a bunch of people in a room together all day, day after day, soon enough they will be comfortable chatting and joking around. Building camaraderie does not require commitment.

Building trust does. It requires commitment both from individuals and from the organization. Individuals must remember to give their co-workers the benefit of the doubt, always. Beware of these detrimental trains of thought (in yourself, not your co-workers). In particular, point number 2:

In a study conducted by Sukhwinder Shergill and colleagues at University College London, pairs of volunteers were connected to a device that allowed each of them to exert pressure on the other volunteer’s fingers. The researcher began by exerting a fixed amount of pressure on the first volunteer’s finger. The first volunteer was then asked to exert the same amount of pressure on the second volunteer’s finger. The second volunteer was then asked to exert the same amount of pressure on the first volunteer’s finger, and so on. Although volunteers tried to respond with equal force, they typically responded with about 40 percent more force than they had just experienced. Each time a volunteer was touched, he touched back harder, which led the other volunteer to touch back even harder. Is this why parties in a conflict invariably think they are both "right"?

Remember, your perception colors everything you experience. If you only give someone as much benefit of the doubt as you think they're giving you, you'll end up in a downward spiral. Trust begets trust; lack of trust begets lack of trust. So trust people more than you think they deserve. Usually you won't be disappointed.
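The spiral compounds quickly. As a back-of-the-envelope sketch, assume purely for illustration that every response overshoots the previous one by the same 40 percent the study reports. A short Python function (`escalation` is a hypothetical name) shows how far an exchange drifts in just a few rounds:

```python
def escalation(initial_force: float, rounds: int, overshoot: float = 0.40) -> list[float]:
    """Force applied in each round, if every response overshoots the last by `overshoot`."""
    forces = [initial_force]
    for _ in range(rounds):
        # Each person tries to "match" what they just felt, but adds 40 percent.
        forces.append(forces[-1] * (1 + overshoot))
    return forces


for round_number, force in enumerate(escalation(1.0, 5)):
    print(f"round {round_number}: {force:.2f}x the original force")
```

After only five exchanges, each side is pushing more than five times as hard as at the start (1.4 to the fifth power is about 5.4), while both believe they are merely responding in kind.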

The hard part

As I mentioned, building trust also requires commitment from the organization. A culture of trust cannot co-exist with a culture of blame. People can only trust each other when they are not worried about who is trying to shift future potential blame to them, and who they can shift it to.

Unfortunately, a culture of blame is the standard in most large organizations. When something goes wrong, the first question in such organizations is "Who screwed up?". This is not a useful question, because discovering the answer doesn't help us understand why the problem happened, and how we can prevent it in the future. It doesn't help the organization improve*.

A better question is "What can we learn from this mistake?". This is a useful question, because discovering the answer practically forces the enterprise to improve. It makes us think about the aspects of the system that allowed the problem to occur, and what changes we can make to make it harder for the problem to occur again. It treats a mistake as a learning opportunity. More importantly, by removing the emphasis on blame, it makes a culture of trust possible.

* Not only that, it's also a trick question, because it implies there is an individual source of the problem. Most problems in a complex enterprise do not have an individual source. Assuming they do is useless at best, and more often downright harmful.

Wednesday, October 04, 2006

On Stevey on Agile

The blogosphere is abuzz about Steve Yegge's condemnation of what he calls 'Bad Agile' (that is, agile anywhere except Google). Everyone has been contributing their two cents' worth, and there have been some interesting responses. The best one I've seen so far comes from Jeff Atwood over at Coding Horror:


"The whole FogCreek/Google-Is-So-Totally-Awesome thing is an annoyance. But I have a deeper problem with this post. I think Steve's criticisms of agile are hysterical and misplaced; attacking Agile is a waste of time because most developers haven't even gotten there yet! The real enemy isn't Agile, it's Waterfall and Big Design Up Front. Even "bad" Agile is a huge quality of life improvement for developers stuck in the dark ages of BDUF. I know because I've been there."

This comes very close to hitting the focal point of this whole debate, I think. I've noticed a pattern in the high-profile bloggers/developers who've taken a very poor view of Agile: it seems that someone has tried to push a particular Agile methodology on them even though they had no pain for it to solve. It seems to me that Joel and Steve already have their own versions of agile development, and they are doing quite well with them. They didn't need XP, Scrum, or any other Agile methodology. So, naturally, they can't help but think Agile is a load of crap designed to make money for consultants.

Someone on the XP mailing list suggested that Steve just "doesn't get it". I disagree. I think he gets it. In fact, he gets it so well that he doesn't need our help. He's already agile. And that's great, even if he doesn't want to fly the (capital-A) Agile flag.

There are really two problems:

1) There are people in the Agile community so excited about their chosen methodology or approach that they start treating it like a Golden Hammer. This is not agile.

Not everyone who isn't doing Scrum/XP/Crystal/whatever is doing BDUF/Waterfall. There are lots of folks out there who are working in an agile way, but not practicing any given 'methodology'. For example, I would categorize the Eclipse Foundation as agile, but they don't use any specific methodology; they use a mix of practices that works for them. If they are happy and successful, why push XP (or any other methodology) on them? Even if you sincerely believe XP will help them, pushing it on them is not only condescending, it's not agile!

2) Steve (and other people like him who are extremely effective with their natural agile approach) rails so strongly against Agile that he will scare everyone away from it, whether it would be useful to them or not. That's a shame. This is the point Jeff at Coding Horror makes, and I couldn't agree more.

Tuesday, September 26, 2006

Scrum Pitfall I: Focusing on People Instead of Features

We have been using Scrum since I joined the team at the end of January. Over the course of the last 8 months, we have started using several XP practices. It took a long time, but we've finally gotten to the point of using a story card wall. Well, at this point it's actually a task card wall, but it's a start :). (For those not familiar with Extreme Programming, or XP, a story card wall is a big wall used to visibly track progress of user stories throughout an iteration. User stories are written on index cards, which are placed on the wall. The location of each card on the wall provides information about its status). It's caused an almost unbelievable improvement in our communication, productivity, and visibility into problems.

Why was there such a big difference? I'll get to that in a minute, but first, a little intro to Scrum:

For those not familiar with Scrum, the daily scrum is a stand-up meeting where each participant answers three questions:

1) What did you do yesterday?

2) What will you do today?

3) What is blocking progress?

It is intended to allow the team to work together to remove obstacles blocking progress and keep momentum during the iteration.

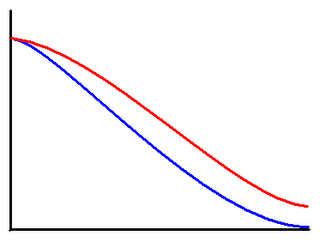

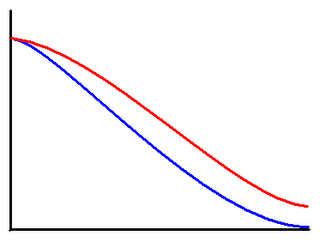

The burndown is a graph of the amount of estimated work remaining over the course of an iteration. The days of the iteration are written on the x axis, and the amount of remaining work is measured on the y axis. Each day, a new data point is added to the graph. The amount of work remaining is calculated from an accompanying list of tasks and time estimates. The time estimate for each task is updated daily.
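As a minimal sketch of that calculation (my own illustration; the task names and numbers are hypothetical), assume each task's remaining-hours estimate is recorded once per day. The burndown is then just the daily sum across tasks, and the 'ideal' line is a straight drop from the starting total to zero on the last day:

```python
def burndown(estimates: dict[str, list[float]]) -> list[float]:
    """Total estimated hours remaining on each day of the iteration.

    `estimates` maps each task to its remaining-hours estimate, updated daily.
    All lists are assumed to cover the same days.
    """
    days = len(next(iter(estimates.values())))
    return [sum(hours[day] for hours in estimates.values()) for day in range(days)]


def ideal_burndown(total_hours: float, days: int) -> list[float]:
    """Straight line from the starting total down to zero on the final day."""
    return [total_hours * (1 - day / (days - 1)) for day in range(days)]


estimates = {
    "Write login form": [8.0, 6.0, 4.0, 0.0],
    "Add audit logging": [5.0, 5.0, 3.0, 1.0],
}
actual = burndown(estimates)  # [13.0, 11.0, 7.0, 1.0]
ideal = ideal_burndown(actual[0], len(actual))
print(actual)
print([round(hours, 1) for hours in ideal])
```

Note that only hours are summed: once the per-task numbers are added together, the graph no longer says anything about how many tasks are actually finished.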

We were getting very little value from these two tools. But why?

As I suspect is the case in many large organizations adopting Scrum for the first time, our Scrum had degenerated into a status report rather than a tool to resolve problems and increase productivity. We often forgot to mention what we would be working on in the upcoming day, and hardly anyone ever admitted to being blocked. Most of the time, we just reported what we had been working on.

As for the burndown, we had developed a habit of ending every iteration with only slight slippage from the 'ideal burndown'. Here's an example of what our burndown graphs almost always looked like:

The blue line is an ideal burndown. Notice it reaches zero at the end of the iteration. The red line is our typical burndown. Doesn't look too bad, right? The problem was, even though there weren't many hours left on the burndown at the end of each iteration, there would be lots of incomplete tasks. We would always have a lot of tasks "almost done" and very few actually done.


There were three main deficiencies in our current process that caused this problem:

1) The burndown was deceptive. It didn't show us how many tasks were complete and how many were incomplete. To find that out, you had to squint at the task list. As a result, we were often surprised at the end of the iteration.

2) We had no way to prioritize tasks on the task list. Developers simply claimed a new task at random when they were available. As a result, often we would finish an iteration with important tasks incomplete but less important tasks completed.

3) In the daily scrum, any given developer would usually only mention that he was blocked if he couldn't make any progress at all. He would not mention that a task he was responsible for was blocked, as long as there were other tasks he could work on. Because of this, blockages would often go unresolved.
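The first deficiency is easy to reproduce with hypothetical numbers. In this sketch (assumed data, not our actual iteration; `summarize` and `IterationSummary` are invented names), ten tasks start at 8 hours each. By the last day three are done and seven are "almost done" with an hour left apiece. The burndown total looks nearly finished, while a simple task count tells a different story:

```python
from typing import NamedTuple


class IterationSummary(NamedTuple):
    hours_left: float
    done: int
    not_done: int


def summarize(remaining: dict[str, float]) -> IterationSummary:
    """Compare the burndown's view (hours left) with a task-count view."""
    hours_left = sum(remaining.values())
    done = sum(1 for hours in remaining.values() if hours == 0)
    return IterationSummary(hours_left, done, len(remaining) - done)


# Hypothetical end-of-iteration snapshot: 10 tasks originally estimated at 80 hours total.
remaining = {f"task-{i}": (1.0 if i < 7 else 0.0) for i in range(10)}
summary = summarize(remaining)
print(f"{summary.hours_left} of 80 hours left")                   # 7.0 of 80 hours left
print(f"{summary.not_done} of {len(remaining)} tasks incomplete")  # 7 of 10 tasks incomplete
```

Less than 10 percent of the estimated work remains, yet 70 percent of the tasks are unfinished: the "almost done" picture in miniature.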

Here's how we solved these problems: We divided our task wall into three columns: Available, Started, and Completed, so we could tell at a glance how many tasks remained incomplete. We divided the Available column into three sections based on priority: High, Medium, and Low, to keep us from starting low priority tasks when higher priority tasks were available. Finally, we put bright yellow sticky notes on task cards that were blocked. At a glance, we can now tell how many tasks are currently blocked, and can take action if we spot dangerous trends (like most of the available high priority tasks being blocked). The visibility the task wall gives us makes it very easy to realize when we're heading for trouble while we still have plenty of time to do something about it, rather than at the end of the iteration.
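To make the rules of the wall explicit, here is a rough Python model of it. This is a hypothetical sketch, not a tool we used: the column and priority names come from our wall, while `Card`, `next_card`, `incomplete`, and `high_priority_mostly_blocked` are invented for illustration, and "most" is assumed to mean more than half.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Column(Enum):
    AVAILABLE = "Available"
    STARTED = "Started"
    COMPLETED = "Completed"


class Priority(Enum):
    HIGH = 1
    MEDIUM = 2
    LOW = 3


@dataclass
class Card:
    title: str
    column: Column = Column.AVAILABLE
    priority: Priority = Priority.MEDIUM
    blocked: bool = False  # the bright yellow sticky note


def next_card(wall: list[Card]) -> Optional[Card]:
    """Highest-priority available card that isn't blocked, if any."""
    candidates = [c for c in wall if c.column is Column.AVAILABLE and not c.blocked]
    return min(candidates, key=lambda c: c.priority.value, default=None)


def incomplete(wall: list[Card]) -> int:
    """Cards not yet in the Completed column."""
    return sum(1 for c in wall if c.column is not Column.COMPLETED)


def high_priority_mostly_blocked(wall: list[Card]) -> bool:
    """Warning sign: most available high-priority cards carry a sticky note."""
    high = [c for c in wall if c.column is Column.AVAILABLE and c.priority is Priority.HIGH]
    return bool(high) and sum(c.blocked for c in high) > len(high) / 2


wall = [
    Card("Login form", priority=Priority.HIGH, blocked=True),
    Card("Audit log", priority=Priority.HIGH, blocked=True),
    Card("Password reset", priority=Priority.HIGH),
    Card("Tooltip copy", priority=Priority.LOW),
    Card("Export CSV", column=Column.STARTED),
    Card("Fix typo", column=Column.COMPLETED, priority=Priority.LOW),
]
print(next_card(wall).title)               # Password reset
print(incomplete(wall))                    # 5
print(high_priority_mostly_blocked(wall))  # True
```

The physical wall answers all three questions at a glance; the model just spells out what "at a glance" is checking.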

This experience reveals a dangerous pitfall that Scrum projects can fall into when not supported by XP practices. The daily scrum iterates over the team, focusing on each individual in turn. The burndown shows only one aggregate view of overall progress. Both make it easy to neglect the actual tasks that need to be completed to make an iteration a success.

I'm eager to hear the opinions and perspectives of others who have used Scrum. Do you support Scrum with XP practices (or vice versa)? Have you encountered this same pitfall? Are there other pitfalls I should keep an eye out for (I've got a few others in mind)?


Wednesday, September 20, 2006
